- ChatGPT is the most popular AI app in the enterprise and Google Bard is the fastest-growing AI app in the enterprise, both by a large margin.

- Source code is posted to ChatGPT more than any other type of sensitive data, at a rate of 158 incidents per 10,000 enterprise users per month.

- DLP and user coaching are the most popular types of controls enterprises use to enable AI app use while preventing sensitive data exposure.

With so much hype surrounding ChatGPT and AI apps in general, it is unsurprising that scammers, cybercriminals, and other attackers try to exploit it for illicit gain.

ChatGPT draws the attention of attackers and scammers because it offers a large pool of targets and potential for profit, and because its users vary widely in technical proficiency.

ChatGPT and other AI apps are on their way to becoming mainstays in the enterprise sector.

Over the past two months, according to Netskope Threat Labs, the percentage of enterprise users accessing at least one AI app each day has increased by 2.4 per cent weekly, for a total increase of 22.5 per cent over that period.

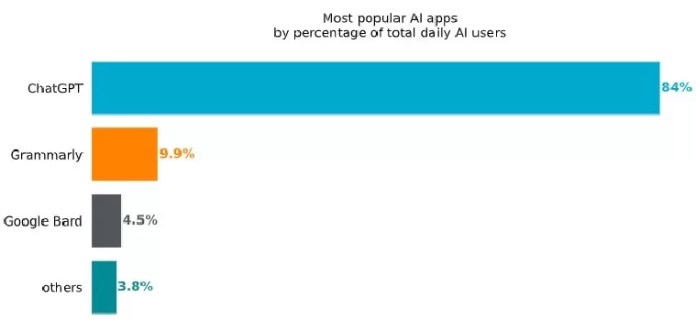

The most popular enterprise AI app by a large margin is ChatGPT, with more than eight times as many daily active users as any other AI app. The next most popular app is Grammarly, which focuses exclusively on writing assistance.

Bard, Google’s chatbot, comes in just below Grammarly. All other AI apps combined (Netskope tracks more than 60, including Jasper, Chatbase, and Copy.ai) are less popular than Google Bard.

At the current growth rate, the number of users accessing AI apps will double within the next seven months.
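The growth figures above are consistent with simple weekly compounding. The short Python sketch below checks this under two assumptions that are ours rather than Netskope's: that the 2.4 per cent rate compounds week on week, and that "two months" means roughly 60 days (60/7 weeks).

```python
import math

# Reported figure: daily AI-app users grew about 2.4 per cent per week.
WEEKLY_GROWTH = 0.024

# Assumption: "two months" is taken as roughly 60 days.
WEEKS_IN_TWO_MONTHS = 60 / 7
WEEKS_PER_MONTH = 52 / 12


def cumulative_growth(weekly_rate: float, weeks: float) -> float:
    """Total fractional increase after compounding weekly_rate for `weeks` weeks."""
    return (1 + weekly_rate) ** weeks - 1


def doubling_time_weeks(weekly_rate: float) -> float:
    """Weeks for a quantity growing at weekly_rate (compounded) to double."""
    return math.log(2) / math.log(1 + weekly_rate)


if __name__ == "__main__":
    total = cumulative_growth(WEEKLY_GROWTH, WEEKS_IN_TWO_MONTHS)
    weeks = doubling_time_weeks(WEEKLY_GROWTH)
    print(f"Growth over ~two months: {total:.1%}")
    print(f"Doubling time: {weeks:.1f} weeks (~{weeks / WEEKS_PER_MONTH:.1f} months)")
```

Under those assumptions the total comes out close to the reported 22.5 per cent, and the doubling time is roughly 29 weeks, a little under seven months.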

Over the same period, the number of AI apps in use in the enterprise held steady: organisations with more than 1,000 users averaged three different AI apps per day, and organisations with more than 10,000 users averaged five.

At the end of June, 1 out of 100 enterprise users interacted with an AI app each day.

For every 10,000 users, an organisation can expect around 660 daily prompts to ChatGPT. But the real question lies in the content of these prompts: Are they harmless queries, or do they inadvertently reveal sensitive data?

A Netskope study revealed that source code was the most frequently exposed type of sensitive data, with 22 out of 10,000 enterprise users posting source code to ChatGPT per month. In total, those 22 users are responsible for an average of 158 posts containing source code per month.

For every 10,000 enterprise users, an organisation experiences approximately 183 incidents per month of sensitive data being posted to ChatGPT. Source code accounts for the largest share, roughly 158 of those 183 incidents.
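Because these figures are quoted per 10,000 users, estimating them for a particular organisation is a matter of proportional scaling. The Python sketch below assumes, as a simplification, that each rate grows linearly with headcount; real usage will vary by industry and workforce. The helper name `scale_rates` is hypothetical.

```python
# Per-10,000-user figures quoted above (Netskope Threat Labs).
RATES_PER_10K: dict[str, float] = {
    "daily_chatgpt_prompts": 660,
    "monthly_source_code_posters": 22,
    "monthly_source_code_posts": 158,
    "monthly_sensitive_incidents": 183,
}


def scale_rates(org_users: int, rates: dict[str, float] = RATES_PER_10K) -> dict[str, float]:
    """Scale per-10,000-user rates to an organisation with `org_users` users.

    Assumes each rate is proportional to headcount (a simplification).
    """
    if org_users < 0:
        raise ValueError("org_users must be non-negative")
    factor = org_users / 10_000
    return {name: value * factor for name, value in rates.items()}


# Average number of source-code posts per user who posts source code.
posts_per_poster = (
    RATES_PER_10K["monthly_source_code_posts"] / RATES_PER_10K["monthly_source_code_posters"]
)
# Share of sensitive-data incidents that involve source code.
source_code_share = (
    RATES_PER_10K["monthly_source_code_posts"] / RATES_PER_10K["monthly_sensitive_incidents"]
)

if __name__ == "__main__":
    for name, value in scale_rates(25_000).items():
        print(f"{name}: {value:,.1f}")
    print(f"posts per poster: {posts_per_poster:.1f}, source-code share: {source_code_share:.0%}")
```

For a 25,000-user organisation this gives about 1,650 daily ChatGPT prompts and roughly 458 sensitive-data incidents per month. It also shows that each user who posts source code does so about seven times a month on average (158 / 22).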

Immediate concern

Beyond the broader opportunities and threats AI brings to humanity, organisations worldwide and their leaders face a more immediate concern: how can they use AI apps safely and securely?

An outright block on AI applications could solve this problem but would do so at the expense of the potential benefits AI apps offer.

According to Ray Canzanese, Threat Research Director at Netskope Threat Labs, organisations that want to enable the safe adoption of AI apps must centre their approach on identifying permissible apps and implementing controls that empower users to use them to their fullest potential, while safeguarding the organisation from risks.

“It is inevitable that some users will upload proprietary source code or text containing sensitive data to AI tools that promise to help with programming or writing. Therefore, organisations must place controls around AI to prevent sensitive data leaks. Controls that empower users to reap the benefits of AI, streamlining operations and improving efficiency, while mitigating the risks are the ultimate goal.”

Multifaceted challenge

Such an approach should include data loss prevention (DLP) and interactive user coaching, along with domain filtering, URL filtering, and content inspection to protect against attacks.

Blocking access to AI-related content and AI applications is a short-term way to mitigate risk, but it sacrifices the potential benefits AI apps offer for corporate innovation and employee productivity.

“As security leaders, we cannot simply decide to ban applications without impacting on user experience and productivity,” James Robinson, Deputy Chief Information Security Officer at Netskope, said.

“Organisations should focus on evolving their workforce awareness and data policies to meet the needs of employees using AI products productively. There is a good path to safe enablement of generative AI with the right tools and the right mindset.”

Safely enabling the adoption of AI apps in the enterprise is a multifaceted challenge: it involves identifying permissible apps and implementing controls that let users get the most out of them while safeguarding the organisation from risk.

Over time, more organisations are likely to adopt DLP controls and real-time user coaching to enable the use of AI apps like ChatGPT while safeguarding against unwanted data exposure.

Steps to securely use AI tools:

- Regularly review AI app activity, trends, behaviours, and data sensitivity to identify risks to the organisation.

- Block access to apps that do not serve any legitimate business purpose or that pose a disproportionate risk. A good starting point is a policy to allow reputable apps currently in use while blocking all others.

- Use DLP policies to detect posts containing potentially sensitive information, including source code, regulated data, passwords and keys, and intellectual property.

- Employ real-time user coaching (combined with DLP) to remind users of company policy surrounding the use of AI apps at the time of interaction.

- Thwart opportunistic attackers trying to take advantage of the growing popularity of AI apps by blocking known malicious domains and URLs and inspecting all HTTP and HTTPS content.

- Use Remote Browser Isolation technology to provide additional protection when there is a need to visit websites in categories that can present a higher risk, like newly observed and newly registered domains.

- Ensure that all security defences share intelligence and work together to streamline security operations. Netskope customers can use Cloud Exchange to share IOCs, import threat intel, export event logs, automate workflows, and exchange risk scores.
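The allowlisting, DLP, coaching, and blocklisting steps above can be combined into a single per-request decision. The Python sketch below is a hypothetical illustration, not Netskope's implementation or API: the domain lists are placeholders, the regular expressions are deliberately simplistic stand-ins for a real DLP engine, and the choice to block secrets outright but only coach on source code is our own assumption.

```python
import re
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    COACH = "coach"  # show a real-time policy reminder to the user
    BLOCK = "block"


# Hypothetical policy lists; a real deployment would source these from its security tooling.
ALLOWED_AI_DOMAINS = {"chat.approved-ai.example", "writer.approved-ai.example"}
KNOWN_MALICIOUS_DOMAINS = {"chatgpt-free-login.example"}

# Illustrative DLP patterns only; production DLP engines use far richer detection.
SECRET_PATTERNS = [
    re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    re.compile(r"\bAKIA[0-9A-Z]{16}\b"),  # AWS access key ID format
    re.compile(r"(?i)\bpassword\s*[:=]\s*\S+"),
]
SOURCE_CODE_PATTERNS = [
    re.compile(r"^\s*(?:def|class)\s+\w+.*:\s*$", re.MULTILINE),  # Python definitions
    re.compile(r"^\s*#include\s*[<\"]", re.MULTILINE),  # C/C++ includes
    re.compile(r"\bpublic\s+(?:static\s+)?(?:class|void)\b"),  # Java declarations
]


def evaluate_post(domain: str, text: str) -> tuple[Action, str]:
    """Decide what to do with a prompt posted to an AI app (hypothetical policy)."""
    domain = domain.lower()
    # Block known malicious look-alike domains first.
    if domain in KNOWN_MALICIOUS_DOMAINS:
        return Action.BLOCK, "known malicious domain"
    # Default-deny: only approved AI apps are allowed at all.
    if domain not in ALLOWED_AI_DOMAINS:
        return Action.BLOCK, "AI app not on the approved list"
    # Secrets are blocked outright.
    if any(p.search(text) for p in SECRET_PATTERNS):
        return Action.BLOCK, "possible password or key detected"
    # Source code triggers a coaching reminder rather than a hard block.
    if any(p.search(text) for p in SOURCE_CODE_PATTERNS):
        return Action.COACH, "possible source code: remind user of AI usage policy"
    return Action.ALLOW, "no sensitive content detected"
```

For example, posting a short Python function to an approved domain yields a coaching action, while the same post to an unapproved domain is blocked. A real coaching flow would show the reminder and let the user proceed or justify the post, which this sketch does not model.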

Discover more from TechChannel News
